Cross entropy is the most widely used loss function for supervised training of image classification models. In this paper, we propose a novel training methodology that consistently outperforms …

Consequently, the accuracy of UCPD is significantly lower than that of SCPD, highlighting the effectiveness of supervised contrastive learning in enhancing the discriminative power and …

We propose a novel extension to the contrastive loss function that allows for multiple positives per anchor, thus adapting contrastive learning to the fully supervised setting.
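To make the idea of multiple positives per anchor concrete, the following is a minimal NumPy sketch of one common way to write such a loss. The function name `supcon_loss`, the `temperature` default, and the choice to average the log-probabilities over each anchor's positives are illustrative assumptions, not necessarily the exact formulation used by the authors.

```python
import numpy as np

def supcon_loss(features, labels, temperature=0.1):
    """Sketch of a supervised contrastive loss (hypothetical helper).

    features: (N, D) array of embeddings, one row per sample.
    labels:   (N,) array of integer class labels.
    Every other sample sharing the anchor's label counts as a positive.
    """
    features = np.asarray(features, dtype=np.float64)
    labels = np.asarray(labels)

    # L2-normalize so that dot products are cosine similarities.
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    n = z.shape[0]

    # Pairwise similarities scaled by the temperature.
    logits = z @ z.T / temperature

    # An anchor is never contrasted against itself.
    self_mask = np.eye(n, dtype=bool)
    logits = np.where(self_mask, -np.inf, logits)

    # Numerically stable log-softmax over each row.
    row_max = logits.max(axis=1, keepdims=True)
    log_denom = row_max + np.log(np.exp(logits - row_max).sum(axis=1, keepdims=True))
    log_prob = logits - log_denom

    # Positives: same label as the anchor, excluding the anchor itself.
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    n_pos = pos_mask.sum(axis=1)
    valid = n_pos > 0  # anchors with no positive are skipped
    if not valid.any():
        return 0.0

    # Average log-probability over each anchor's positives, then over anchors.
    pos_log_prob = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    per_anchor = -pos_log_prob[valid] / n_pos[valid]
    return float(per_anchor.mean())


# Toy usage: two tight clusters in 2-D.
feats = np.array([[1.0, 0.0], [1.0, 0.01], [0.0, 1.0], [0.01, 1.0]])
loss_matched = supcon_loss(feats, np.array([0, 0, 1, 1]))     # labels agree with clusters
loss_mismatched = supcon_loss(feats, np.array([0, 1, 0, 1]))  # labels cut across clusters
```

In this toy example the loss is small when the labels agree with the geometric clusters and much larger when they cut across them, which is the behaviour a supervised contrastive objective is meant to reward.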

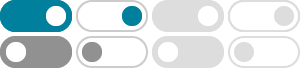

In Fig. 1, we compare the training setup for the cross-entropy, self-supervised contrastive and supervised contrastive (SupCon) losses. Note that the number of parameters in the inference …

Informed by the insights gained through our metrics, we propose two new supervised contrastive learning strategies tailored to binary imbalanced datasets. These adjustments are easy to …

We propose ASCL, an Adversarial Supervised Contrastive Learning framework, which adapts the contrastive learning scheme to adversarial examples and further extends the self-supervised …

Supervised contrastive learning (SCL) (Khosla et al., 2020) aims to learn generalized and discriminative feature representations given labeled data.